Performance Tuning Using Wait Statistics

06 Jun 2022

Introduction

When a user application submits a request for data to SQL Server, the biggest element of SQL Server’s total response time would, ideally, be the CPU processing time. In other words, this is the time it takes the CPU to pull together the required data, slice, dice, and sort it according to the query specifications, and send it back. However, in a busy database system, with hundreds or thousands of user requests competing for the limited resources of the database server, there will be times when a request is waiting to proceed, rather than actively processing. For example, Session A’s request may be waiting for Session B to release a lock on a resource to which it needs access.

Every time a request is forced to wait, SQL Server records the length of the wait and the cause of the wait, a.k.a. the wait type, which generally indicates the resource on which the request was waiting. These records are the wait statistics.

The SQLOS scheduler and thread scheduling

In order to understand wait statistics in SQL Server, and how SQL Server measures wait times, we need to delve a little into SQL Server’s scheduling model.

A scheduler is a piece of software that coordinates the execution of different processes and administers the resources available to them. SQL Server has its own scheduling mechanism, implemented in what we know as SQLOS, because the Windows scheduler cannot satisfy the needs of a relational database engine. Windows uses a preemptive scheduling mechanism and assigns a quantum of CPU time to every thread. When a thread consumes its quantum, it is sent to a queue and other threads are granted execution.

In contrast, SQL Server uses a cooperative scheduling mechanism in which threads can voluntarily yield their quantum of time. This allows SQL Server to optimize CPU utilization, because when a thread is signaled for execution but is not ready to run, it can yield its quantum of time in favor of other threads.

SQL Server has one scheduler per CPU core, regardless of whether the core is physical or hyperthreaded. However, the schedulers aren’t bound to a CPU unless you define an affinity mask. This means that a scheduler running on CPU 1 can, after a context switch, end up running on CPU 2.

A thread can be in one of three states, as exposed by the STATUS column of the sys.dm_exec_requests DMV:

- RUNNING – on the CPU.

- SUSPENDED – whenever a thread requires a resource, such as a page that is not in memory, the thread yields the CPU and moves onto an unordered waiter list, with a thread status of SUSPENDED, until the required resource is available.

- RUNNABLE – if a thread is not waiting on any resource, but is not currently on the CPU, for example because the scheduler to which it is assigned currently has another session’s thread running, it will be placed in a first-in-first-out (FIFO) queue called the runnable queue and the thread status will be RUNNABLE.
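To get a feel for how requests are distributed across these states at a given moment, a query along the following lines may help. It is a minimal sketch, not a definitive diagnostic: it simply counts rows in sys.dm_exec_requests by status, and the column alias request_count is an illustrative name. Note that the status column can also report values such as background and sleeping for some requests.

```sql
-- Count current requests by status (running, runnable, suspended, ...).
-- The current session is excluded so that this query does not count itself.
SELECT  r.status,
        COUNT(*) AS request_count      -- illustrative alias
FROM    sys.dm_exec_requests AS r
WHERE   r.session_id <> @@SPID
GROUP BY r.status
ORDER BY request_count DESC;
```

A persistently large number of runnable requests relative to running ones suggests threads are queuing for CPU, whereas a large number of suspended requests points towards resource waits.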

The SQLOS allows any given thread a small quantum of processing time (4 ms), before it must yield the CPU to another session’s thread. If, before its allotted quantum is complete, the running thread needs to request a resource, it moves to the waiter list. Once the resource is available, the thread moves to the back of the runnable queue and then, when its time comes, back onto the CPU. It then gets another 4 ms quantum of processing time. If it doesn’t have to wait for any resource in this time, but fails to complete its work, it yields the CPU anyway, moves to the back of the runnable queue, and awaits its next turn.

Using wait statistics for performance tuning

Analysis of wait statistics represents a very effective means to diagnose response times in our databases. In very simple terms, our requests either work, or they wait:

Response time = service time + wait time

First, it’s perfectly normal for threads to wait during task execution. In fact, in busy databases supporting a high degree of concurrent access, it’s almost inevitable that a thread for any particular task will have to wait for a resource at some point in its execution cycle, however briefly. If not, it may simply exceed its 4 ms quantum and wait in the runnable queue.

Second, wait statistics are not really a tool to use in isolation in order to diagnose a performance issue. They are best used in conjunction with other diagnostic tools, as a way to direct our tuning efforts and avoid wasting time.

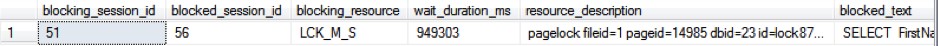

Investigating active-but-blocked requests using sys.dm_os_waiting_tasks

We can investigate the current, “real time” wait profile of a SQL Server instance, in terms of the requests and sessions associated with suspended threads, by querying the sys.dm_os_waiting_tasks DMV and joining it to various other useful views and functions. The sys.dm_os_waiting_tasks DMV reveals, amongst other columns, the:

- session_id of the session associated with the suspended thread.

- exec_context_id of the task associated with the suspended thread. If there is more than one exec_context_id associated with a single session_id then SQL Server has parallelized the task.

- wait_type – the current wait type for the suspended thread.

- wait_duration_ms – length of time, in ms, that the suspended thread has waited for the current wait type.

- blocking_session_id – reveals the session_id associated with the blocking thread, if a thread is suspended due to blocking, for example because it is waiting to acquire a lock.

- resource_description – for some types of resource, a description of the resource the suspended thread is waiting to acquire. For example, if it’s a locking wait, this reveals the lock level (page, table, and so on) and the ID of the locked resource.

A common use of this DMV is to investigate blocking. In such cases, we will likely observe significant locking waits (waits of type LCK_M_XX), indicating that a session is unable to proceed with its work until it can acquire a lock on the resource on which another session’s thread holds a conflicting lock. The most common causes of persistent blocking are poor code, which causes SQL Server to hold locks for far longer than necessary, lack of indexing, or an IO-intensive process that is blocking many other sessions for long periods.

We can investigate blocking by querying the sys.dm_os_waiting_tasks DMV for rows that report a blocking_session_id.
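A minimal sketch of a blocking investigation follows. It assumes that joining sys.dm_os_waiting_tasks to sys.dm_exec_requests on session_id, and fetching the statement text through sys.dm_exec_sql_text, gives enough context. The choice of columns and the alias waiting_sql_text are illustrative assumptions, not a canonical listing.

```sql
-- List suspended tasks that are blocked by another session,
-- longest waits first.
SELECT  wt.session_id,
        wt.exec_context_id,
        wt.wait_type,
        wt.wait_duration_ms,
        wt.blocking_session_id,
        wt.resource_description,
        st.text AS waiting_sql_text    -- illustrative alias
FROM    sys.dm_os_waiting_tasks AS wt
        INNER JOIN sys.dm_exec_requests AS er
            ON wt.session_id = er.session_id
        -- OUTER APPLY keeps the row even when no statement text is available
        OUTER APPLY sys.dm_exec_sql_text(er.sql_handle) AS st
WHERE   wt.blocking_session_id IS NOT NULL
        -- ignore parallel threads of one session waiting on each other
        AND wt.blocking_session_id <> wt.session_id
ORDER BY wt.wait_duration_ms DESC;
```

If the same blocking_session_id appears on many rows, that session is a good candidate for the head of the blocking chain.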

Analyzing historical wait statistics using sys.dm_os_wait_stats

We can also perform historical wait statistics analysis, using the data provided in sys.dm_os_wait_stats.

If a SQL Server instance has been running for quite a while and then is subjected to a significant change, such as adding an important new index, it’s worth considering clearing the old wait stats in order to prevent the old cumulative wait stats masking the impact of the change on the wait times.

The sys.dm_os_wait_stats DMV gives us a list of all the different types of waits that threads have encountered, the number of times they have waited on a resource to be available, and the amount of time waited. The following columns are available in the view (times are all in millisecond units):

- wait_type – the type of wait, which generally indicates the resource on which the worker threads waited (typical resource waits include lock, latch, and disk I/O waits, and so on).

- wait_time_ms – total, cumulative amount of time that threads have waited on the associated wait type; this value includes the time in the signal_wait_time_ms column. The value increments from the moment a task stops execution to wait for a resource, to the point it resumes execution.

- signal_wait_time_ms – the total, cumulative amount of time threads took to start executing after being signaled; this is time spent on the runnable queue.

- waiting_tasks_count – the cumulative total number of waits that have occurred for the associated resource (wait_type).

- max_wait_time_ms – the maximum amount of time that a thread has been delayed, for a wait of this type.

There are many reasons why a certain task within SQL Server may need to wait, which means there are many possible values for the wait_type column.

Identifying high signal waits (CPU pressure)

If the signal wait time is a significant portion of the total wait time then it means that tasks are waiting a relatively long time to resume execution after the resources that they were waiting for became available. This can indicate either that there are lots of CPU-intensive queries, which may need optimizing, or that the server needs more CPU. The query below will provide a measure of how much of the total wait time is signal wait time.
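A minimal sketch of such a measure is shown here. It sums the two time columns across all wait types in sys.dm_os_wait_stats and expresses signal wait time as a percentage of total wait time. The column aliases are illustrative, and no benign wait types are filtered out, which is a simplifying assumption.

```sql
-- Signal wait time as a percentage of total wait time, instance-wide.
-- NULLIF guards against division by zero just after the stats are cleared.
SELECT  SUM(signal_wait_time_ms) AS total_signal_wait_time_ms,
        SUM(wait_time_ms)        AS total_wait_time_ms,
        CAST(100.0 * SUM(signal_wait_time_ms)
             / NULLIF(SUM(wait_time_ms), 0) AS DECIMAL(5, 2))
                                 AS pct_signal_waits
FROM    sys.dm_os_wait_stats;
```

Because the figures are cumulative since the statistics were last cleared, the percentage describes the long-run picture rather than the current moment.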

If signal waits analysis indicates CPU pressure, then the sys.dm_os_schedulers DMV can help verify whether a SQL Server instance is currently CPU-bound. This DMV returns one row for each of the SQL Server schedulers and it lists the total number of tasks that are assigned to each scheduler, as well as the number that are runnable. Other tasks on the scheduler that are in the current_tasks_count but not the runnable_tasks_count are ones that are either sleeping or waiting for a resource.

The filter for schedulers below 255 removes from the result set the numerous hidden schedulers in SQL Server, which are used for backups, the Dedicated Administrator Connection (DAC), and so on, and are not of interest when investigating general CPU load.
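A minimal sketch of a scheduler check with that filter applied might look as follows. The selection of columns is an assumption about what is most useful here; all of them are columns of sys.dm_os_schedulers.

```sql
-- One row per visible scheduler, with its assigned and runnable task counts.
SELECT  scheduler_id,
        cpu_id,
        status,
        current_tasks_count,
        runnable_tasks_count,
        current_workers_count,
        active_workers_count
FROM    sys.dm_os_schedulers
WHERE   scheduler_id < 255     -- exclude hidden schedulers (backups, DAC, ...)
ORDER BY scheduler_id;
```

A runnable_tasks_count that stays well above zero across most schedulers, over repeated samples, is consistent with CPU pressure; a single sample on its own proves little.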

Identifying the primary resource waits

The primary use of the sys.dm_os_wait_stats DMV is to help us determine on which wait types and which resources SQL Server has spent the most time waiting, historically, while executing that server’s workload. As noted earlier, it’s important to focus this analysis on the most prevalent waits, and also filter out ‘benign’ waits that will occur frequently on any database system and are not, except in extreme edge cases, the cause of performance issues.

The query below provides both total wait times as well as resource and signal wait times, filters out many benign waits, and performs some math to present very clearly the most significant waits, historically, on the instance.
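A minimal sketch of such a query follows. The list of benign wait types is a short, illustrative subset chosen for this example, not an exhaustive or authoritative list, and the column aliases are likewise assumptions. Resource wait time is derived as wait_time_ms minus signal_wait_time_ms.

```sql
-- Top 10 wait types by total wait time, excluding some benign waits.
WITH Waits AS
(
    SELECT  wait_type,
            wait_time_ms / 1000.0                         AS wait_s,
            (wait_time_ms - signal_wait_time_ms) / 1000.0 AS resource_s,
            signal_wait_time_ms / 1000.0                  AS signal_s,
            waiting_tasks_count                           AS wait_count,
            -- share of the total wait time that remains after filtering
            100.0 * wait_time_ms / SUM(wait_time_ms) OVER () AS pct
    FROM    sys.dm_os_wait_stats
    WHERE   wait_time_ms > 0
            AND wait_type NOT IN
            (   -- illustrative subset of benign waits
                N'LAZYWRITER_SLEEP', N'SLEEP_TASK', N'SLEEP_SYSTEMTASK',
                N'SQLTRACE_BUFFER_FLUSH', N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP',
                N'WAITFOR', N'LOGMGR_QUEUE', N'CHECKPOINT_QUEUE',
                N'REQUEST_FOR_DEADLOCK_SEARCH', N'XE_TIMER_EVENT',
                N'XE_DISPATCHER_WAIT', N'BROKER_TO_FLUSH',
                N'BROKER_TASK_STOP', N'BROKER_EVENTHANDLER',
                N'CLR_MANUAL_EVENT', N'CLR_AUTO_EVENT',
                N'DISPATCHER_QUEUE_SEMAPHORE', N'FT_IFTS_SCHEDULER_IDLE_WAIT',
                N'DIRTY_PAGE_POLL', N'HADR_FILESTREAM_IOMGR_IOCOMPLETION',
                N'SP_SERVER_DIAGNOSTICS_SLEEP', N'ONDEMAND_TASK_QUEUE',
                N'RESOURCE_QUEUE'
            )
)
SELECT TOP (10)
        wait_type,
        CAST(wait_s     AS DECIMAL(16, 2)) AS wait_s,
        CAST(resource_s AS DECIMAL(16, 2)) AS resource_s,
        CAST(signal_s   AS DECIMAL(16, 2)) AS signal_s,
        wait_count,
        CAST(pct        AS DECIMAL(5, 2))  AS pct_of_total
FROM    Waits
ORDER BY wait_s DESC;
```

The percentages are relative to the filtered set, so extending or shortening the exclusion list changes them.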

Common wait types

In general, when examining wait statistics, either historically or for currently active requests, it’s wise to focus on the top waits, according to wait time, and look out for high wait times associated with the following specific wait types (some of which we’ll cover in more detail in subsequent sections).

• CXPACKET

Often indicates nothing more than that certain queries are executing with parallelism; CXPACKET waits in the server are not an immediate sign of problems, although they may be the symptom of another problem, associated with one of the other high value wait types on the instance.

• SOS_SCHEDULER_YIELD

The tasks executing in the system are yielding the scheduler, having exceeded their quantum, and are having to wait in the runnable queue for other tasks to execute. As discussed earlier, this wait type is most often associated with high signal wait rather than waits on a specific resource. If you observe this wait type persistently, investigate for other evidence that may confirm the server is under CPU pressure.

• THREADPOOL

A task had to wait to have a worker thread bound to it, in order to execute. This could be a sign of worker thread starvation, requiring an increase in the number of CPUs in the server, to handle a highly concurrent workload, or it can be a sign of blocking, resulting in a large number of parallel tasks consuming the worker threads for long periods.

• LCK_*

These wait types signify that blocking is occurring in the system and that sessions have had to wait to acquire a lock, of a specific type, which was being held by another database session.

• PAGEIOLATCH_*, IO_COMPLETION, WRITELOG

These waits are commonly associated with disk I/O bottlenecks, though the root cause of the problem may be, and commonly is, a poorly performing query that is consuming excessive amounts of memory in the server, or simply insufficient memory for the buffer pool.

• PAGELATCH_*

Non-IO waits for latches on data pages in the buffer pool. PAGELATCH_* waits are often associated with allocation contention issues. One of the most well-known allocation issues associated with PAGELATCH_* waits occurs in tempdb when a large number of objects are being created and destroyed in tempdb and the system experiences contention on the Shared Global Allocation Map (SGAM), Global Allocation Map (GAM), and Page Free Space (PFS) pages in the tempdb database.

• LATCH_*

These waits are associated with lightweight short-term synchronization objects that are used to protect access to internal caches, but not the buffer cache. These waits can indicate a range of problems, depending on the latch type. The specific latch class that has the most accumulated wait time associated with it can be found by querying the sys.dm_os_latch_stats DMV.

• ASYNC_NETWORK_IO

This wait is often incorrectly attributed to a network bottleneck. In fact, the most common cause of this wait is a client application that is performing row-by-row processing of the data being streamed from SQL Server as a result set (client accepts one row, processes, accepts next row, and so on). Correcting this wait type generally requires changing the client side code so that it reads the result set as fast as possible, and then performs processing.

• OLEDB

Occurs when a thread makes a call to a structure that uses the OLEDB provider and is waiting while it returns the data. A common cause of such waits is linked server queries. However, many DMVs also use OLEDB internally, so frequent calls to these DMVs can cause these waits. DBCC checks also use OLEDB so it’s common to see this wait type when such checks run.
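Two of the wait types above lend themselves to a quick follow-up query. The sketch below is illustrative rather than definitive. The first statement ranks latch classes by accumulated wait time for LATCH_* waits. The second looks for tasks currently waiting on PAGELATCH_* in tempdb, assuming that tempdb has database_id 2 and that resource_description for page latches starts with the database ID followed by a colon.

```sql
-- LATCH_*: which latch classes have accumulated the most wait time?
SELECT TOP (10)
        latch_class,
        waiting_requests_count,
        wait_time_ms,
        max_wait_time_ms
FROM    sys.dm_os_latch_stats
WHERE   wait_time_ms > 0
ORDER BY wait_time_ms DESC;

-- PAGELATCH_*: tasks currently waiting on page latches in tempdb.
-- Assumption: resource_description is '<database_id>:<file_id>:<page_id>'
-- and tempdb is database_id 2.
SELECT  wt.session_id,
        wt.wait_type,
        wt.wait_duration_ms,
        wt.resource_description
FROM    sys.dm_os_waiting_tasks AS wt
WHERE   wt.wait_type LIKE N'PAGELATCH[_]%'
        AND wt.resource_description LIKE N'2:%'
ORDER BY wt.wait_duration_ms DESC;
```

The second query is a point-in-time sample, so it is worth running several times while the contention is occurring.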

Wait Statistics baselines

Once we know what’s normal, we can focus our tuning efforts, and we have a reference in the event that performance suddenly degrades. We can see trends over time and look out for worrying changes in the pattern of waits. We can also map sudden changes to, for example, deployment of new code, or a change in hardware configuration, and so on.

In order to establish what is normal, and then track changes over time, we need to establish baselines for our historical wait statistics data.

Clearing wait stats

By default, SQL Server clears the cumulative wait statistics for an instance from the sys.dm_os_wait_stats DMV upon instance restart. In addition, a DBA can clear the statistics manually using DBCC SQLPERF.
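For reference, the manual clear is a single DBCC SQLPERF call, shown here as a short sketch. It resets the counters for the whole instance, so it is usually worth capturing the current values first.

```sql
-- Reset the cumulative wait statistics for the instance.
DBCC SQLPERF (N'sys.dm_os_wait_stats', CLEAR);
```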

The queries below will reveal when wait statistics were last cleared by an instance restart, as well as if, and when, someone last cleared them manually. Simply compare the two values to see if wait statistics have been manually cleared since the last restart.

/* check SQL Server start time - 2008 and higher */

/* check SQL Server start time - 2005 and higher */
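A minimal sketch of these checks follows. The first two statements read the instance start time, using sys.dm_os_sys_info on SQL Server 2008 and higher and the tempdb creation date on SQL Server 2005 and higher. The third statement is an approximation that rests on an assumption: that the SQLTRACE_INCREMENTAL_FLUSH_SLEEP background wait accumulates more or less continuously, so that its wait_time_ms roughly equals the time elapsed since the wait statistics were last cleared. The column aliases are illustrative.

```sql
-- Instance start time, SQL Server 2008 and higher.
SELECT  sqlserver_start_time
FROM    sys.dm_os_sys_info;

-- Instance start time, SQL Server 2005 and higher:
-- tempdb is recreated every time the instance starts.
SELECT  create_date AS tempdb_create_date
FROM    sys.databases
WHERE   name = N'tempdb';

-- Approximate time the wait statistics were last cleared (assumption:
-- this background wait accumulates continuously on the instance).
SELECT  wait_type,
        wait_time_ms,
        DATEADD(SECOND, -CAST(wait_time_ms / 1000 AS INT), GETDATE())
            AS approx_time_cleared
FROM    sys.dm_os_wait_stats
WHERE   wait_type = N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP';
```

If approx_time_cleared is noticeably later than the start time, the statistics have probably been cleared manually since the last restart.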

Collecting the data

In order to collect wait statistics, on a regular schedule, the first step is to create a table to hold the information, as shown below.
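A minimal sketch of such a table is given here. The table name dbo.WaitStats comes from later in this section; the RowNum and CaptureDate columns and the index name are assumptions made for illustration, while the remaining columns mirror those of sys.dm_os_wait_stats.

```sql
-- Create the holding table only if it does not already exist.
IF OBJECT_ID(N'dbo.WaitStats', N'U') IS NULL
BEGIN
    CREATE TABLE dbo.WaitStats
    (
        RowNum              BIGINT IDENTITY(1, 1) NOT NULL,  -- hypothetical surrogate key
        CaptureDate         DATETIME     NOT NULL,           -- hypothetical: time of the snapshot
        wait_type           NVARCHAR(60) NOT NULL,
        waiting_tasks_count BIGINT       NOT NULL,
        wait_time_ms        BIGINT       NOT NULL,
        max_wait_time_ms    BIGINT       NOT NULL,
        signal_wait_time_ms BIGINT       NOT NULL
    );

    CREATE CLUSTERED INDEX CI_WaitStats
        ON dbo.WaitStats (CaptureDate, RowNum);
END;
```

Clustering on the capture date keeps each snapshot physically together, which suits the date-range queries used when reviewing the data.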

The second step is simply to schedule a query to run on a regular basis, which captures the wait information to this table.
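A minimal sketch of the capture step is shown below. It assumes that dbo.WaitStats has a CaptureDate column plus columns matching those of sys.dm_os_wait_stats; the column names are illustrative. The statement could be scheduled through a SQL Server Agent job or any other scheduler.

```sql
-- Snapshot the current cumulative wait statistics into dbo.WaitStats.
-- All rows of one snapshot share the same capture time.
DECLARE @CaptureDate DATETIME;
SET @CaptureDate = GETDATE();

INSERT INTO dbo.WaitStats
        (CaptureDate, wait_type, waiting_tasks_count,
         wait_time_ms, max_wait_time_ms, signal_wait_time_ms)
SELECT  @CaptureDate,
        wait_type,
        waiting_tasks_count,
        wait_time_ms,
        max_wait_time_ms,
        signal_wait_time_ms
FROM    sys.dm_os_wait_stats
WHERE   wait_time_ms > 0;      -- skip wait types that have never occurred
```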

We should capture wait statistics regularly, at least once a week or once a month. We could do so more frequently, perhaps daily, but remember that unless we are clearing the data regularly, they represent an aggregation of waits since the last restart. The longer the waits have been accumulating, the harder it may be to spot smaller changes in wait percentages.

Reviewing wait statistics data

We’ll want to regularly review the waits for each SQL Server instance. If you capture them once a week, then check on the trending once a week. A simple SELECT against the dbo.WaitStats table can retrieve all the data captured for the last 30 days.
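A minimal sketch of such a review query is given here, assuming that dbo.WaitStats has a CaptureDate column plus columns matching those of sys.dm_os_wait_stats; these column names are illustrative.

```sql
-- All wait statistics captured in the last 30 days,
-- grouped by snapshot with the largest waits first.
SELECT  CaptureDate,
        wait_type,
        waiting_tasks_count,
        wait_time_ms,
        max_wait_time_ms,
        signal_wait_time_ms
FROM    dbo.WaitStats
WHERE   CaptureDate > DATEADD(DAY, -30, GETDATE())
ORDER BY CaptureDate, wait_time_ms DESC;
```

Because the captured values are cumulative, trends are easier to see by comparing the difference between consecutive snapshots than by reading the raw totals.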